An Artificial Intelligence Reckoning in Ad Targeting

As artificial intelligence systems increasingly mediate our digital lives, we face a critical inflection point: Will we allow the surveillance business model to colonize the AI era?

By Elijah Maubert

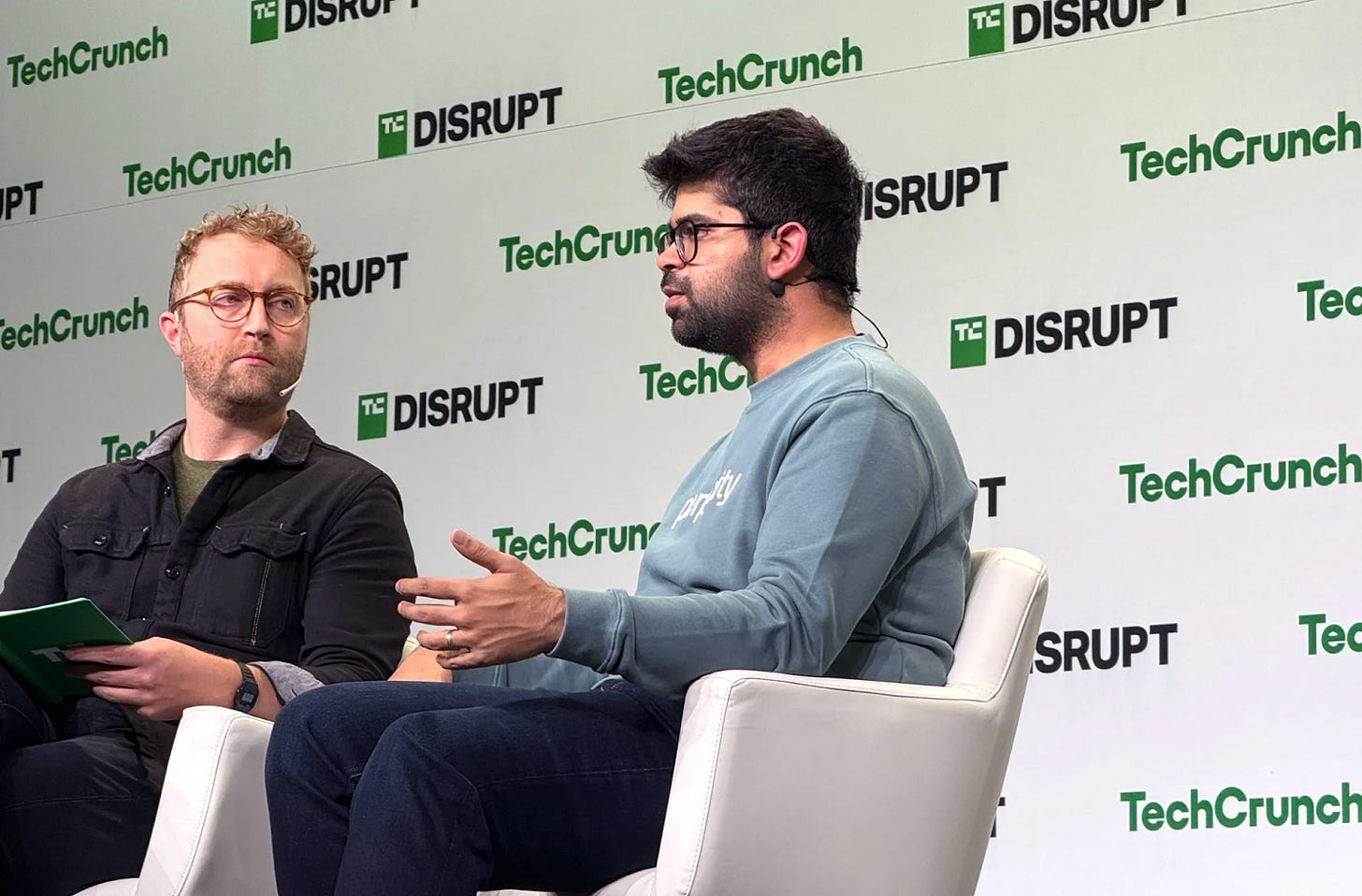

"We're going to track everything you do in our browser so we can sell hyper-personalised ads." When Perplexity AI co-founder Aravind Srinivas uttered those words on April 24, he wasn't unveiling a novel strategy; he was describing the next stage of a business model that has underwritten the internet for a quarter-century. The promise of "free" digital services has always been subsidized by a hidden toll: the capture of human attention and the behavioral data that accompanies it. The ad-funded internet was not inevitable. It was an ideological choice with far-reaching consequences, among them polarized discourse, precarious digital labor, and a public sphere optimized for agitation. As AI systems progress from predicting behavior to actively shaping it, the cost of repeating this mistake will be measured in diminished human autonomy.

During the web boom of the late 1990s, a consequential decision was made: Information would circulate free of charge, with advertising footing the bill. This choice ushered in what law professor Tim Wu calls the attention economy, a marketplace where every publisher, platform, and content creator competes to capture our next millisecond of focus with increasingly potent emotional and cognitive hooks.

Over time, competitive pressure and profit incentives drove platforms to harvest ever more precise data: tracking our movements, monitoring our moods, even listening to our conversations. The more granular the prediction, the higher the price advertisers will pay for it. This logic has since progressed from knowing behavior to shaping it at scale.

The social costs of this arrangement have become painfully evident. A business model based on holding attention inevitably rewards outrage, extremism, and compulsive design patterns. Researchers have linked our resulting media diet to concerning trends in adolescent mental health, the viral spread of misinformation, and a precarious gig economy that shifts economic risk onto workers whose livelihoods depend on algorithmic favor. The true price is paid not at checkout, but in eroded civic discourse and collective well-being.

AI agents dramatically expand what persuasion technologies can accomplish. Philosopher Luciano Floridi identifies the next frontier as hyper-suasion: AI systems that synthesize vast repositories of personal data to tailor messages to our individual psychological susceptibilities, blurring the boundary between recommendation and manipulation. For these systems to function effectively, they require total context: not just what we click, but what we read, what we purchase, and even what we whisper to our smart devices. Hence Srinivas's forthright admission that Perplexity's browser will monitor "everything."

If the first Faustian bargain traded privacy for free search, the second risks trading autonomy itself for frictionless AI assistance. When software that drafts our emails, selects our restaurants, or generates our policy documents is financed through behavioral advertising, the incentive shifts from merely predicting human desires to actively shaping them toward profitable outcomes.

We must treat hyper-personalized advertising as we came to treat leaded gasoline: once considered essential, now recognized as harmful. To accomplish this, policymakers must commit to phasing out individual-level ad targeting, replacing it with contextual or cohort-based approaches that do not depend on comprehensive dossiers of personal behavior. A clear sunset date (such as 2030) would create planning certainty while allowing businesses to transition toward privacy-respecting alternatives, whether subscriptions, contextually targeted advertising, or public-service funding.

To prevent today's harms from replicating in tomorrow's AI landscape, this phase-out should be paired with a fiduciary duty for any AI agent that harvests broad contextual data. Such a duty, mirroring the obligations doctors and lawyers owe their clients, would legally bind "assistants" to act in users' best interests and prohibit the resale of behavioral data. Violations should trigger class-action eligibility and regulatory penalties proportionate to global revenue, creating meaningful deterrence.

This policy framework aligns well with Europe's risk-tiered AI Act and could integrate seamlessly with Canada's proposed Consumer Privacy Protection Act, potentially establishing a transatlantic standard. It also addresses free-expression concerns by regulating how ads reach us, not what they contain. And by reducing demand for unlimited personal data, it would weaken the economic incentive for pervasive surveillance.

Srinivas's candid revelation should serve as a warning. It signals that surveillance capitalism will advance unchecked into the AI era unless policymakers, investors, and citizens collectively decide otherwise. We rightly celebrate AI's potential to translate languages, accelerate drug discovery, and address climate challenges. The fundamental question, however, is not whether these systems can help us, but what they will be optimized to achieve.

By phasing out behavioral targeting and establishing fiduciary guardrails, we can demand an internet and an AI ecosystem that make human ingenuity, not human vulnerability, their primary profit center. The technology may be novel, but the lesson is timeless: Revenue models shape technological futures. Change the model, and we change our future.

Elijah Maubert joins the Max Bell School with recent experience at the Macdonald-Laurier Institute, where he contributed to policy research on AI, technology, and innovation. He recently completed his undergraduate studies at McGill University in political science and applied philosophy, writing a thesis on the ethics of AI. With a strong foundation in both theoretical and practical policy analysis, and a keen interest in the ethical implications of emerging and exponential technologies, Elijah is dedicated to addressing policy challenges at the nexus of technology and society.